Embeddings Nearest Neighbors Learn Stuff

Overview

Here is what we are trying to do.

The UI is up to you. But basically we want to shorten the time it takes for someone to find a product with specific attributes by allowing them to highlight regions of the product that they do not like.

This boils down to two components:

- Interactive Frontend: presents products and returns a bitmask of the highlighted pixel region.

- ML Backend: a nearest neighbors algorithm that tries to find products that are similar to the masked image and dissimilar (in the targeted region) to the original image, but that still appear as neighbors of both. If the user gives feedback multiple times, we can also interpolate away from products with disliked regions.
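To make the handoff between the two components concrete, here is a minimal sketch of applying the frontend's bitmask to a product image before it goes to the backend. The mask format (a boolean height-by-width array where True means "highlighted"), the fill value, and the function name `apply_mask` are all assumptions for illustration; the brief does not specify them.

```python
import numpy as np


def apply_mask(image, mask, fill=0):
    """Return a copy of `image` with the highlighted pixels replaced by `fill`.

    image: array of shape (H, W, C)
    mask:  boolean array of shape (H, W), True = highlighted (assumed format)
    """
    image = np.asarray(image)
    mask = np.asarray(mask, dtype=bool)
    if mask.shape != image.shape[:2]:
        raise ValueError("mask must match the image height and width")
    masked = image.copy()  # leave the original image untouched
    masked[mask] = fill    # blank out every highlighted pixel
    return masked


# Toy example: a white 4x4 RGB image with the middle 2x2 block highlighted.
image = np.full((4, 4, 3), 255, dtype=np.uint8)
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True
masked = apply_mask(image, mask)
```

The original and the masked image can then both be embedded, which gives the backend the two query points it needs.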

Hypothesis

This should allow users to navigate dense regions of product catalogs more effectively by supplying visual feedback. We assume most people navigate through rec slates and search engine results, which are biased towards previously purchased or highly similar products.

Dataset

NK will provide images and basic metadata (names, links, etc.) on ecommerce products.

Getting Started

Environment Setup

You are going to need some ML libraries. Here are the steps to spin up a virtual env and install things through the Python package manager (pip).

- create your virtual environment:

$: python3 -m venv ./chosen_name

- activate your environment whenever you need to run scripts:

$: source ./chosen_name/bin/activate

- install some stuff you will need (remove ‘-gpu’ if on laptop):

(chosen_name)$: pip install tensorflow-gpu

(chosen_name)$: pip install keras

(chosen_name)$: pip install cloudpickle

(chosen_name)$: pip install nmslib
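Once the packages are installed, a quick check like the one below can confirm that the active environment actually sees them. This is only a sketch: the helper name `missing_packages` is made up for illustration, and the list uses import names (the `tensorflow-gpu` pip package is imported as `tensorflow`).

```python
import importlib.util

# Import names of the packages installed above.
REQUIRED = ["tensorflow", "keras", "cloudpickle", "nmslib"]


def missing_packages(names):
    """Return the names that cannot be found in the active environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(REQUIRED)
print("missing:", missing if missing else "none")
```

If anything shows up as missing, the most likely cause is that the virtual environment was not activated in the current shell.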

Frontend Requirements

Here is a list of things that the frontend needs to do. I don’t have any code for this part, so you will have to own the frontend and figure everything out yourself. You might look into Flask or Django for Python web frameworks. Flask you can get running within a few hours; it basically lets you inject Python variables into HTML templates (which can also run JS, I believe).

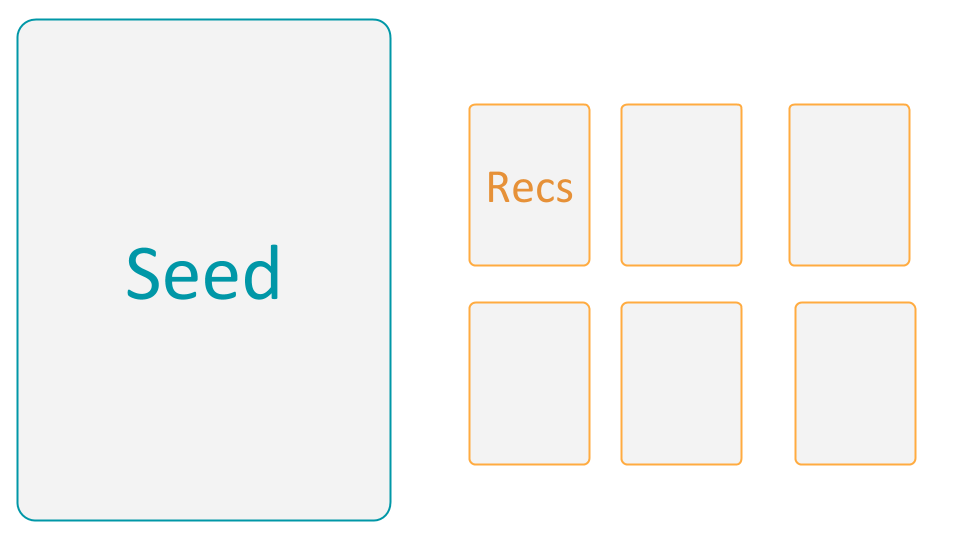

- present an enlarged image of the “seed” product

- seed product image is highlightable

- also present N recommended (similar) “rec” product images

- if a user clicks on a rec image, it becomes the new seed product (compute new recs)

- if a user highlights a region of the seed image, the rec slate is updated

Bonus/challenge features (if you get bored/have time):

- on hover over a rec image, compute and display the recs similar to that product in a popout window

ML Component

On a high level, we are going to turn images into vectors and then compute distances between the vectors (aka figure out the neighbors).

For this you have to implement 3 things: an embedding model, approximate nearest neighbors, and then the logic for averaging the embeddings, gluing everything together, etc.

I have provided sample code for obtaining embeddings through a pre-trained neural network, and for obtaining approximate nearest neighbors within the embedding space.
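As a rough illustration of the glue logic, here is a small sketch that uses exact brute-force cosine similarity in NumPy as a stand-in for the approximate nearest neighbors index, and toy 2-D vectors as a stand-in for real image embeddings. The scoring rule (similarity to the masked embedding minus similarity to the original, restricted to candidates that are neighbors of both) is one possible reading of the goal and is an assumption, as are all function names and the `pool` parameter.

```python
import numpy as np


def normalize(vectors):
    """Scale vectors to unit length along the last axis."""
    vectors = np.asarray(vectors, dtype=np.float64)
    norms = np.linalg.norm(vectors, axis=-1, keepdims=True)
    return vectors / np.maximum(norms, 1e-12)


def average_embedding(vectors):
    """Average several embeddings into a single unit-length query vector."""
    return normalize(np.mean(normalize(vectors), axis=0))


def nearest_neighbors(catalog, query, k):
    """Exact (brute-force) k nearest catalog rows by cosine similarity."""
    sims = normalize(catalog) @ normalize(query)
    order = np.argsort(-sims)[:k]
    return order, sims[order]


def rerank_with_feedback(catalog, original, masked, k, pool=10):
    """Assumed scoring: keep items that are neighbors of BOTH queries, then
    prefer items close to the masked embedding and far from the original."""
    near_original, _ = nearest_neighbors(catalog, original, pool)
    near_masked, _ = nearest_neighbors(catalog, masked, pool)
    candidates = np.intersect1d(near_original, near_masked)
    unit = normalize(catalog[candidates])
    score = unit @ normalize(masked) - unit @ normalize(original)
    return candidates[np.argsort(-score)][:k]


# Toy catalog of four "products" with hand-picked 2-D embeddings.
catalog = np.array([[1.0, 0.0], [0.9, 0.2], [0.6, 0.8], [0.0, 1.0]])
original = np.array([1.0, 0.0])  # embedding of the seed image
masked = np.array([0.6, 0.8])    # embedding of the seed with a region masked
recs = rerank_with_feedback(catalog, original, masked, k=2, pool=3)
```

In the real pipeline, the brute-force search would be swapped for the approximate index and the toy vectors for embeddings from the pre-trained network. When several rounds of feedback come in, `average_embedding` is one simple way to fold the masked embeddings into a single query.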